Use any model from any provider with just one API.

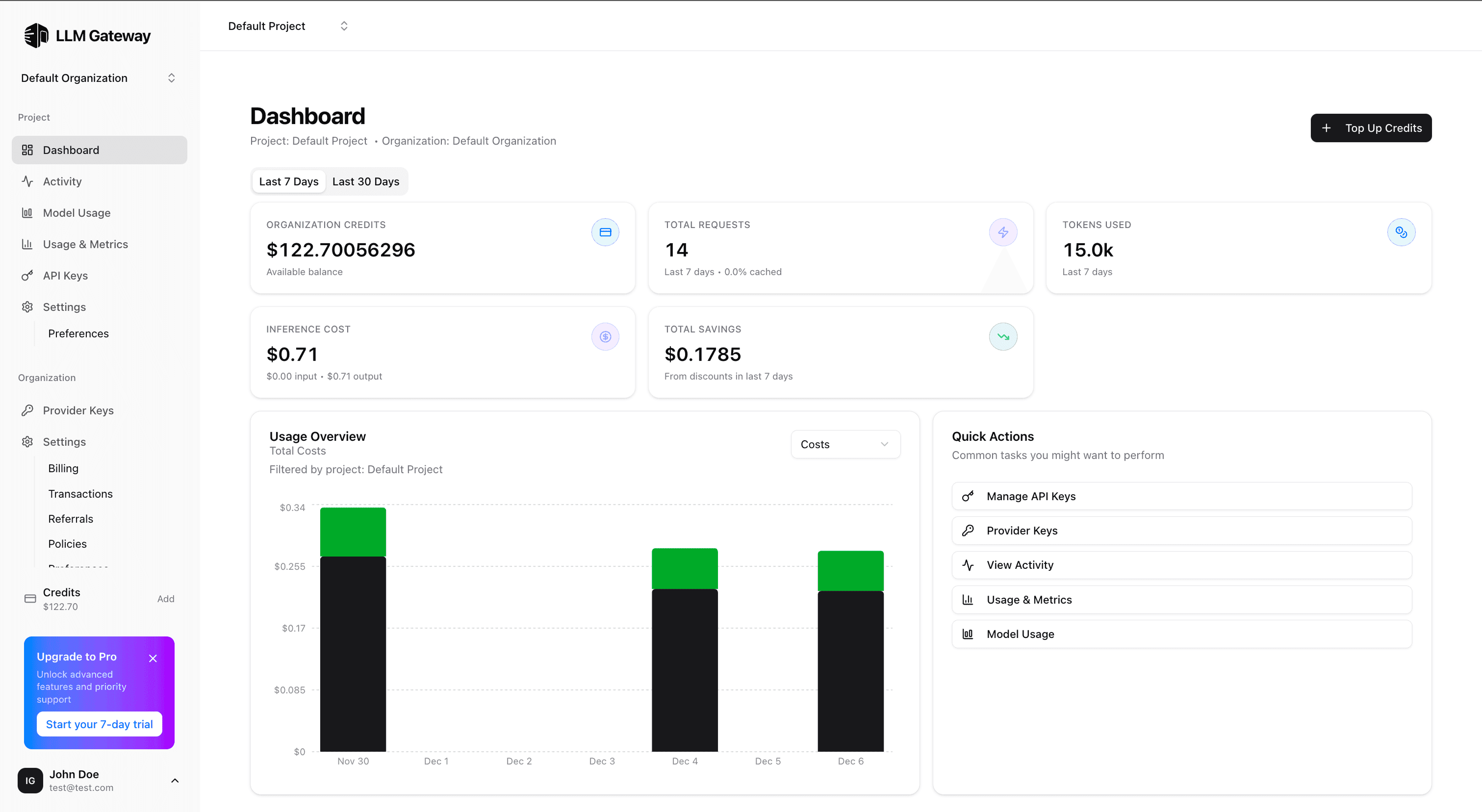

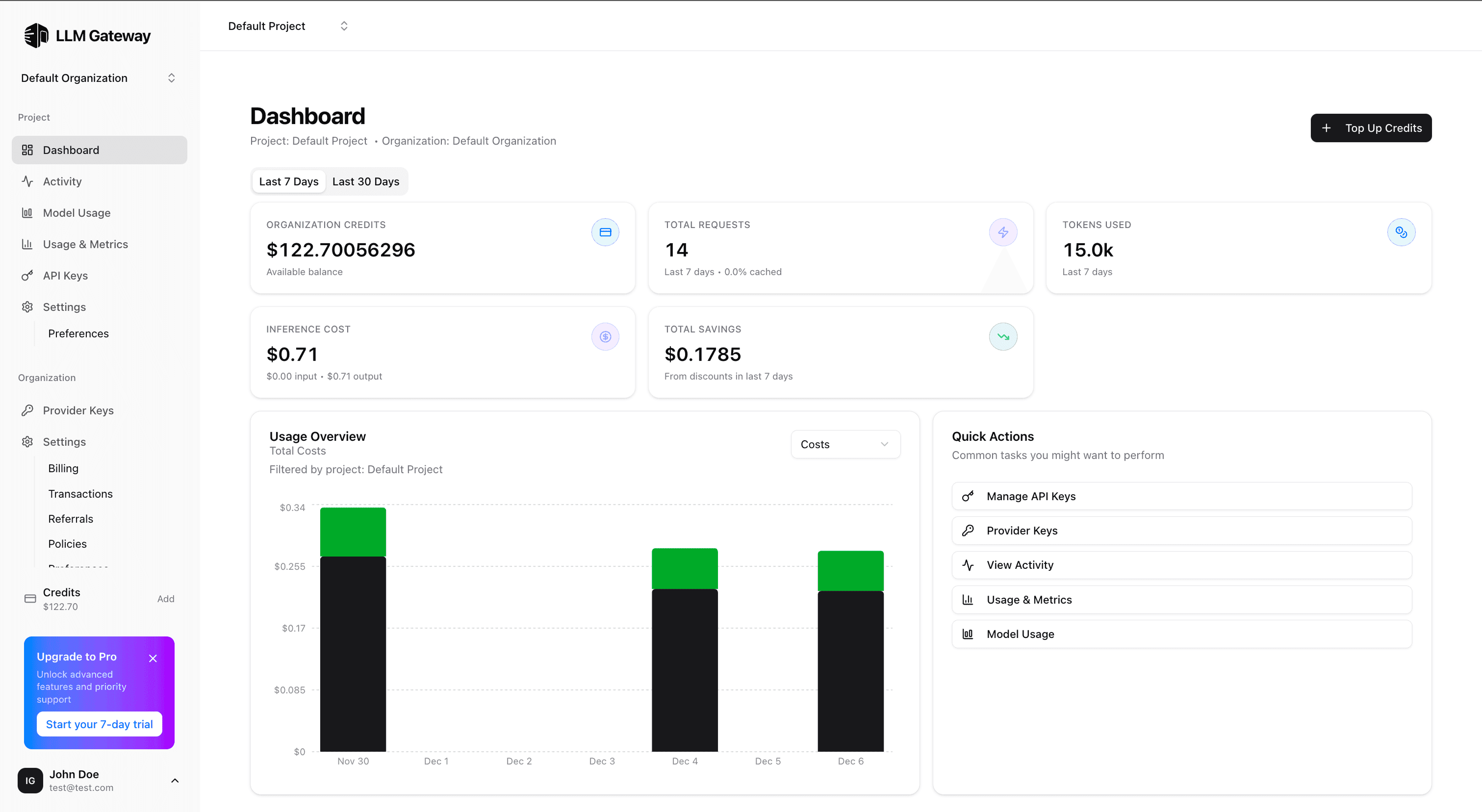

Route, manage, and analyze your LLM requests across multiple providers with one unified API interface.

We dynamically route requests from your devices to the optimal AI model from providers such as OpenAI, Anthropic, Google, and more.

Just change your API endpoint and keep your existing code. Works with any language or framework.

    import openai

    client = openai.OpenAI(
        api_key="YOUR_LLM_API_KEY",
        base_url="https://internal.llmapi.ai/v1",
    )

    response = client.chat.completions.create(
        model="gpt-4o",
        messages=[{"role": "user", "content": "Hello, how are you?"}],
    )

    print(response.choices[0].message.content)
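For languages or frameworks without an OpenAI SDK, the same call can be made as a plain HTTP request. The OpenAI client sends a JSON `POST` to `{base_url}/chat/completions` with a bearer token, so the sketch below does the same using only the Python standard library. It is a minimal illustration, not an official client: the helper names `build_chat_request` and `send_chat_request` are hypothetical, and it assumes the endpoint accepts the standard OpenAI-style request body.

```python
import json
import urllib.request

# Base URL taken from the example above.
BASE_URL = "https://internal.llmapi.ai/v1"


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request.

    Hypothetical helper: assumes the endpoint accepts the same JSON body
    and bearer-token header that the OpenAI client sends.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(request: urllib.request.Request) -> str:
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Calling `send_chat_request(build_chat_request("YOUR_LLM_API_KEY", "gpt-4o", "Hello, how are you?"))` should behave like the SDK example. To try a different model, only the `model` string changes.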

LLM API routes your request to the appropriate provider while tracking usage and performance across all languages and frameworks.

Start using LLM API today and take control of your AI infrastructure.